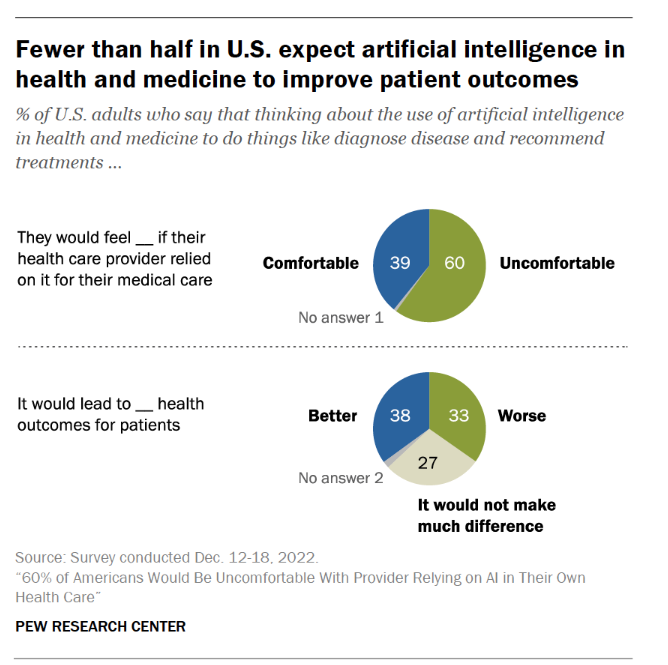

Most U.S. adults think AI is being adopted in American health care too quickly, and they feel “significant discomfort…with the idea of AI being used in their own health care,” according to survey research from the Pew Research Center.

The top line: 60% of Americans would be uncomfortable with [their health care] provider relying on AI in their own care, according to a consumer poll fielded in December 2022 among more than 11,000 U.S. adults.

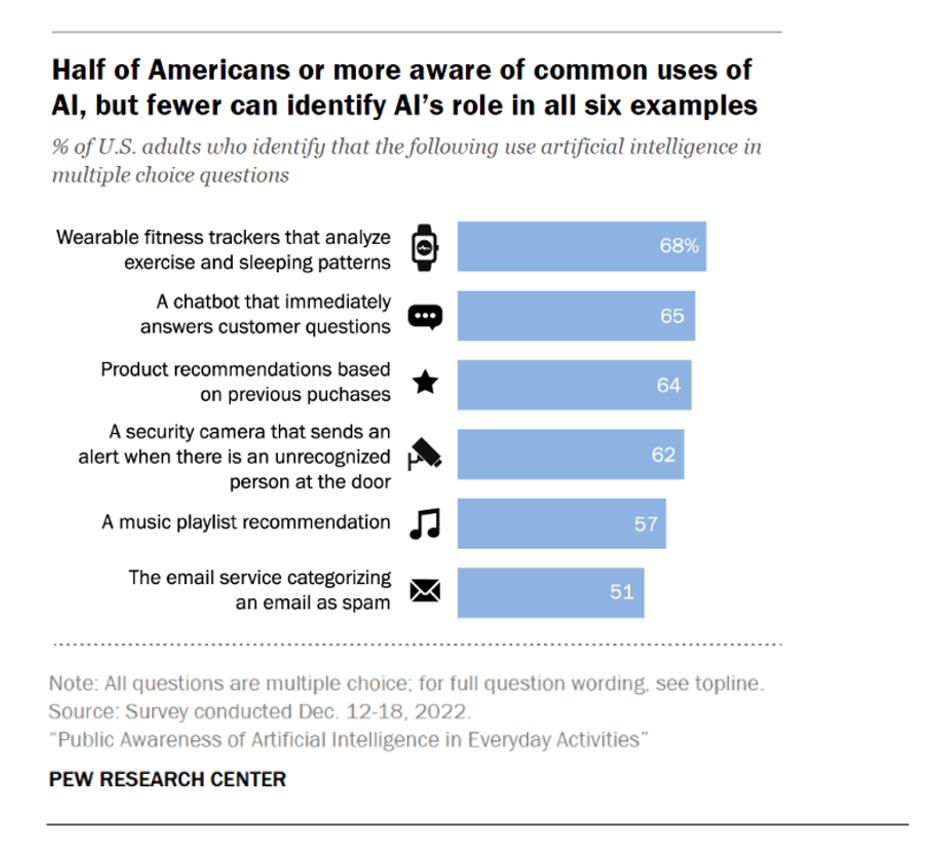

Most people who are aware of common uses of AI know about wearable fitness trackers that can analyze exercise and sleep patterns, a top health/wellness application for the technology. Other commonly recognized examples of AI in daily life included chatbots that answer customers’ questions, product recommendations based on past purchases, and security cameras that send alerts when they “see” an unrecognized person at the door.

Pew Research Center has published several analyses based on the data collected in the December 2022 consumer survey. I’ll focus on a few of the health/care data points in this post, but I recommend reading the entire set of reports to learn how U.S. consumers are looking at AI in everyday life.

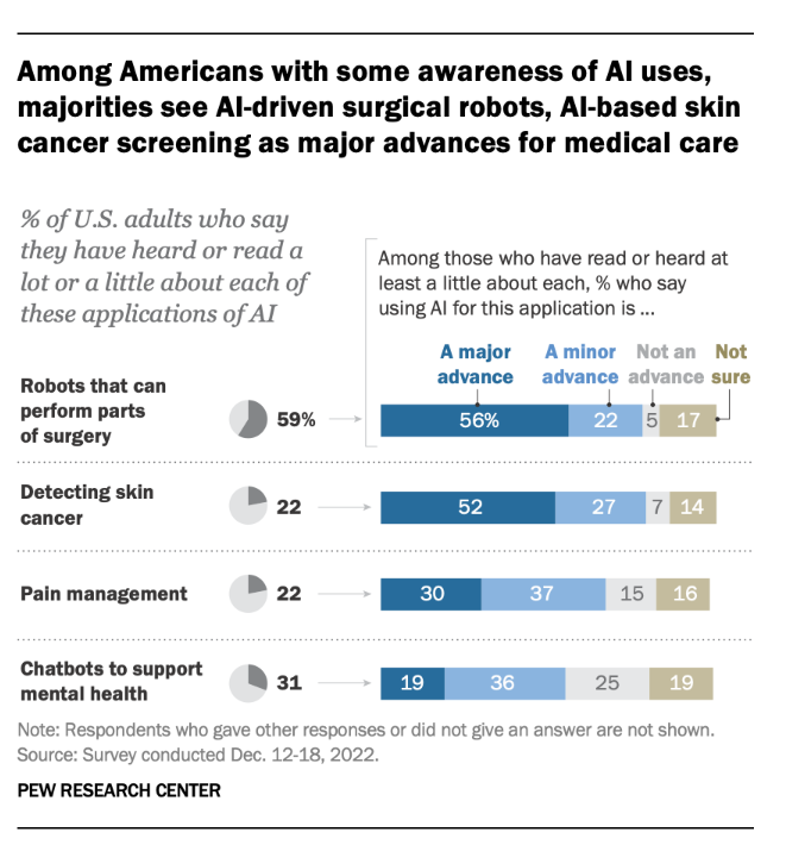

Among U.S. consumers who are aware of these AI applications, more than one-half view robots that can perform surgery, as well as AI tools that can detect skin cancer, as major advances in medical care.

What about AI for pain management and chatbots for mental health? Not so much: consumers tend to see these as minor advances rather than major ones, a view held by about one-third of consumers.

For mental health, 46% of people thought AI chatbots should only be used by people who are also seeing a therapist. This view was held by roughly the same proportion of people who had heard about AI chatbots for mental health as of those who had not.

Fewer than one-half of people expect AI in health and medicine to improve patient outcomes. Note that one-third of people expect AI to lead to worse health outcomes.

Medical errors continue to challenge American health care. About 4 in 10 people see the potential for AI to reduce the number of mistakes made by health care providers, as well as the potential for AI to address bias in health care and advance health equity.

This is an important opportunity for AI applications to address in U.S. health care: two in three Black adults say bias based on patients’ race or ethnicity is a major problem in health and medicine, with another one-quarter calling bias a minor problem, according to the Pew study.

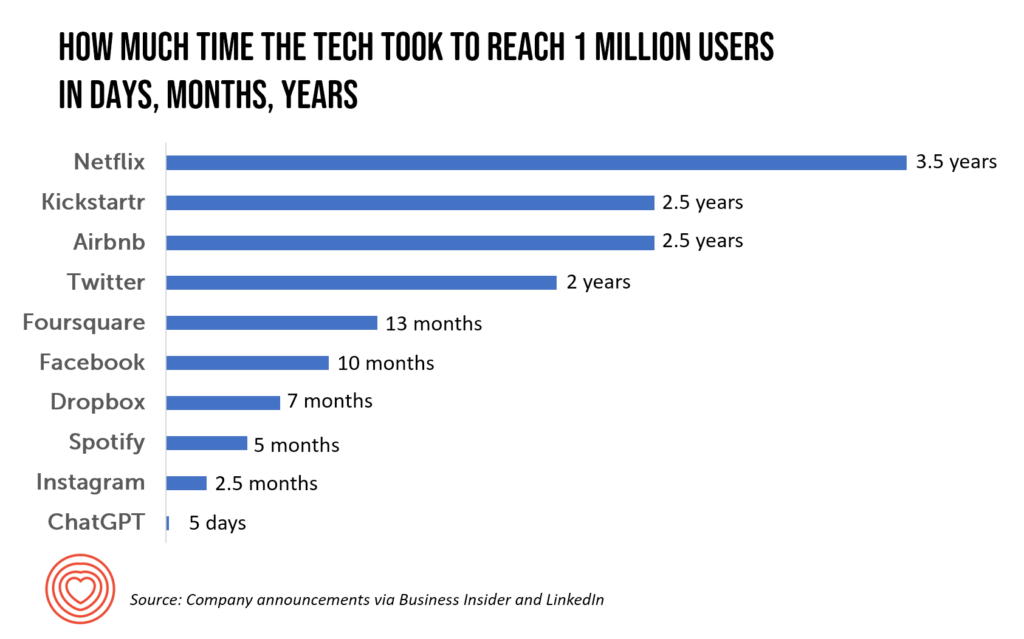

Health Populi’s Hot Points: As industry moves faster and faster toward AI, and given the hockey-stick S-curve of ChatGPT adoption, mass media are covering the issue to help people keep up with the technology. USA Today published a well-balanced and well-researched piece titled “ChatGPT is poised to upend medical information. For better and worse.”

In her coverage, Karen Weintraub reminds us: “ChatGPT launched its research preview on a Monday in December [2022]. By that Wednesday, it reportedly already had 1 million users. In February, both Microsoft and Google announced plans to include AI programs similar to ChatGPT in their search engines.”

Dr. Ateev Mehrotra of Harvard and Beth Israel Deaconess, often a source of good information on evidence-based health care, added, “The best thing we can do for patients and the general public is (say), ‘hey, this may be a useful resource, it has a lot of useful information, but it often will make a mistake, and don’t act on this information alone in your decision-making process.’”

And Dr. John Halamka, now heading up the Mayo Clinic Platform, concluded that, while AI and ChatGPT won’t replace doctors, “doctors who use AI will probably replace doctors who don’t use AI.” Dr. Halamka also recently discussed AI and ChatGPT developments in health care on an AMA Update webcast.

From the patient’s point of view, check out Michael L. Millenson’s column in Forbes discussing how, in cancer, AI can empower patients and change their relationships with doctors and the treatment process.

A recent essay in The Conversation, co-written by a medical ethicist, explored a range of ethical issues that ChatGPT’s adoption in health care could present. Privacy breaches of patient data, erosion of patient trust, how to accurately generate evidence of the technology’s usefulness, and ensuring safety in health care delivery are among the issues the authors call out, as well as the potential for further entrenching a digital divide and health disparities.

Vox published a piece this week arguing for slowing down the adoption of AI. Sigal Samuel asserted, “Pumping the brakes on artificial intelligence could be the best thing we ever do for humanity.” She argues that “Progress in artificial intelligence has been moving so unbelievably fast lately that the question is becoming unavoidable: How long until AI dominates our world to the point where we’re answering to it rather than it answering to us?”

Samuel debunks three objections that AI proponents raise in this bullish, go-go nascent phase of adoption:

- Objection 1: “Technological progress is inevitable, and trying to slow it down is futile”

- Objection 2: “We don’t want to lose an AI arms race with China,” and,

- Objection 3: “We need to play with advanced AI to figure out how to make advanced AI safe.”

Yesterday, Microsoft and Nuance announced their automated clinical documentation application embedded with GPT-4 and the “conversational” (i.e., chat) model.

Given the fast-paced adoption of AI in medical care, with ChatGPT as a use case, we will all be affected by this technology as patients and caregivers, and as workers in the health/care ecosystem. It behooves us all to stay current, stay honest and transparent, and stay open to learning what works and what doesn’t. And to ensure trust among patients, clinicians and the larger health care system, we need to operate with a sense of privacy- and equity-by-design, respecting people’s values and worth, and act with transparency and respect for all.

In hopeful fashion, Dr. John Halamka concluded his discussion on the AMA Update with this optimistic view: “So how about this: if in my generation, we can take out 50% of the burden, the next generation will have a joy in practice.”

Concluding this discussion for now, wishing you joy in your work- and life-flows!
